🎯 Executive Summary (30 seconds)

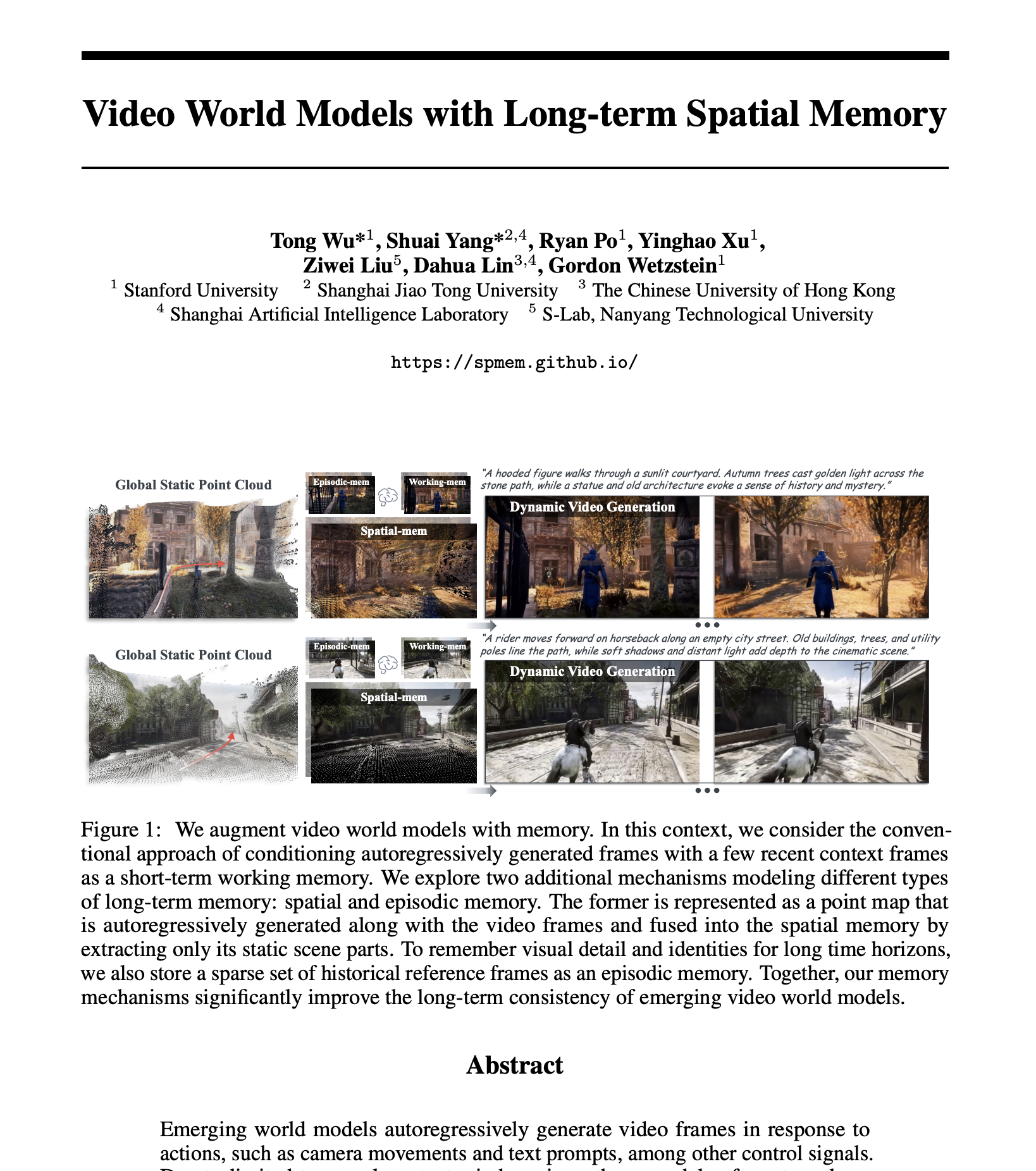

The breakthrough: Stanford & Google researchers introduce geometry-grounded long-term spatial memory for autoregressive video diffusion models, fusing a static 3-D point-cloud map with short-term working memory and sparse episodic key-frames to stop scenes from “forgetting” themselves.

Impact: Eliminates drift on multi-minute generations and bumps every VBench quality metric to the top of the leaderboard (e.g., Aesthetic Quality ↑ 12%, Temporal Flicker ↓ 15%).

Who should care: Game-engine vendors, robotics-sim startups, digital-twin platforms, and any investor hunting the “Sora-killer” in long-form video AI.

🧠 ELI5: What Just Happened? (1 minute)

Imagine trying to shoot a continuous, one-take movie in a VR game, but the set keeps sliding away every 10 seconds. Standard video AIs develop “amnesia” because they only glance at a few recent frames. The new system:

| Old Way | New Way |
|---|---|
| 100 frames max context → world drifts | Reconstructs a 3-D point map and keeps it in memory |
| No notion of “where walls are” | Every new frame updates that map (static things stay, moving things fade) |
| Quality tanks after ~30 s | Generates minutes-long videos with stable geometry |

Think of it as giving the model a LEGO blueprint of the room so it never forgets where the couch is.
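To make the “static things stay, moving things fade” idea concrete, here is a toy sketch of a map update. It is not the researchers' pipeline: the voxel grid, the `SpatialMemory` class, and every parameter (`voxel_size`, `decay`, `static_hits`, `drop_below`) are assumptions chosen for illustration only.

```python
import math
from dataclasses import dataclass, field

Voxel = tuple[int, int, int]


@dataclass
class SpatialMemory:
    """Toy voxel map: repeatedly observed cells persist, one-off cells fade."""
    voxel_size: float = 0.25   # hypothetical grid resolution (metres)
    decay: float = 0.5         # hypothetical per-frame fade for unseen cells
    static_hits: int = 3       # observations before a cell counts as static
    drop_below: float = 0.1    # cells fading under this weight are forgotten
    weights: dict[Voxel, float] = field(default_factory=dict)
    hits: dict[Voxel, int] = field(default_factory=dict)

    def _key(self, point) -> Voxel:
        # Snap a 3-D point to the integer index of its grid cell.
        return tuple(math.floor(c / self.voxel_size) for c in point)

    def update(self, points) -> None:
        """Fuse one frame's 3-D points into the map."""
        seen = {self._key(p) for p in points}
        for v in seen:
            self.hits[v] = self.hits.get(v, 0) + 1
            self.weights[v] = 1.0          # fresh observation, full weight
        for v in list(self.weights):
            if v in seen or self.hits[v] >= self.static_hits:
                continue                   # seen this frame, or already static
            self.weights[v] *= self.decay  # not re-observed: fade
            if self.weights[v] < self.drop_below:
                del self.weights[v]
                del self.hits[v]

    def static_map(self) -> set[Voxel]:
        """Cells observed often enough to be treated as fixed geometry."""
        return {v for v, h in self.hits.items() if h >= self.static_hits}


memory = SpatialMemory()
for t in range(6):
    wall = (1.0, 0.0, 2.0)        # same position in every frame
    mover = (0.0, 0.0, float(t))  # a different position in every frame
    memory.update([wall, mover])

print(sorted(memory.static_map()))
```

In this toy run the wall is seen in every frame and ends up in the static map, while the moving object's older positions fade out and are dropped. A real system would work on dense point clouds from depth estimation rather than two hand-placed points.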

💼 Business Implications (2 minutes)

Markets that unlock when videos stop drifting

| Sector | Pain Point | What changes with long-term memory |
|---|---|---|
| Gaming / UGC | Procedural worlds pop-in, camera glitches | Endless “Minecraft-style” vistas that stay coherent |
| Robotics Sim | Sim-to-real gap from visual drift | Cheaper synthetic training data for SLAM & manipulation |
| Virtual Production | Greenscreen requires manual cleanup | AI can render whole scenes in-camera, cost ↓ 30-50% |
| Digital Twins (AEC) | Large-site fly-throughs break | Hour-long simulations for factories & cities |

Competitive dynamics

- Advantaged: NVIDIA Omniverse, Unity, Roblox, Unreal Marketplace (plug-in opportunities).

- Catch-up needed: Pure text-to-video startups focused on <10 s clips.

- M&A watch: Point-cloud SLAM companies (e.g. Luma, Cut3r) become hot targets.

Integration checklist

- Sensors: Requires depth sensing or on-the-fly monocular depth estimation (a LiDAR unit for highest fidelity adds ~$800).

- Compute: Training used 64 A100s; inference fits on 2×H100 with progressive patching.

- Timeline: Prototype in 6 months for enterprise simulators; consumer UGC inside 24 months.

🎮 Interactive Widget Concept

“World-Memory Stress Test”

Drag a slider from 10 s → 5 min generation length.

Left pane: baseline model—watch objects melt.

Right pane: memory model—scene stays solid.

Toggle “Spiderman swing” trajectory to see point-cloud fusion in action.

Goal: let investors feel the compounding value of spatial consistency.

📊 Investment Lens (1 minute)

| Item | Estimate |
|---|---|
| R&D to product | $40–80 M (open-source DiT backbone + depth fusion IP) |
| CapEx for cloud rendering | $0.03 / generated second today → targets $0.005 in 18 mo |
| Regulatory | Low: no implants; synthetic-media disclosure rules apply |
| Risk | Medium: real-time depth still noisy under fast camera swings |
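To put the cloud-rendering estimate in per-hour terms, the short calculation below converts the two per-second prices into the cost of one generated hour and derives the steady monthly price change the 18-month target would imply. The constant-rate assumption and the helper names are illustrative, not something stated in the estimate itself.

```python
PRICE_TODAY = 0.03    # USD per generated second (estimate above)
PRICE_TARGET = 0.005  # USD per generated second (18-month target above)
MONTHS = 18


def cost(seconds: float, price_per_second: float) -> float:
    """Rendering cost in USD for a clip of the given length."""
    return seconds * price_per_second


def implied_monthly_multiplier(start: float, end: float, months: int) -> float:
    """Constant monthly price multiplier that takes `start` to `end`."""
    return (end / start) ** (1 / months)


hour_today = cost(3600, PRICE_TODAY)
hour_target = cost(3600, PRICE_TARGET)
monthly = implied_monthly_multiplier(PRICE_TODAY, PRICE_TARGET, MONTHS)

print(f"One generated hour today:     ${hour_today:.2f}")
print(f"One generated hour at target: ${hour_target:.2f}")
print(f"Implied monthly price change: {(monthly - 1) * 100:.1f}%")
```

Under these assumptions an hour of generated video costs roughly $108 today and roughly $18 at the target price, a 6× reduction that corresponds to prices falling by a little under 10% per month.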

Historical rhyme: When mobile GPUs added hardware image-stabilization in 2015, mobile video apps exploded 20× in two years. Expect a similar “consistency dividend” here.

🎯 Action Items for Investors & Founders

Track new patents mentioning “geometry-grounded long-term spatial memory.”

Monitor the VBench leaderboard: look for Background Consistency scores above 0.96 (the baseline tops out at 0.95).

Build now: Tools that assume minutes-long coherent scenes—storyboarding bots, simulation data brokers, AI-DM filming assistants.
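For readers who want a feel for what a consistency score measures, here is a simplified stand-in: the mean cosine similarity between feature vectors of consecutive frames, compared against the 0.96 bar mentioned above. This is not VBench's implementation; the function names and the tiny hand-written feature vectors are assumptions, and a real pipeline would take its features from an image encoder.

```python
import math

BASELINE_TOP = 0.95  # best baseline score cited above
TARGET = 0.96        # bar to watch for, cited above


def cosine(a, b) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def consistency_score(features) -> float:
    """Mean cosine similarity between consecutive frame feature vectors."""
    pairs = list(zip(features, features[1:]))
    if not pairs:
        raise ValueError("need at least two frames")
    return sum(cosine(a, b) for a, b in pairs) / len(pairs)


def clears_bar(score: float, threshold: float = TARGET) -> bool:
    """True when a score beats the threshold worth tracking."""
    return score > threshold


# Hypothetical per-frame features: one stable scene, one that drifts.
stable = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]]
drifting = [[1.0, 0.0], [0.0, 1.0]]

print(clears_bar(consistency_score(stable)))
print(clears_bar(consistency_score(drifting)))
```

Because the scores cluster near 1.0, a move from 0.95 to 0.96 is a larger change than it looks: the gap to a perfect score shrinks by a fifth.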

📈 Market Predictions

- 12 mo: First indie game demo with endless stable worlds → viral on Steam.

- 24 mo: Unity or Unreal integrates memory hooks; SaaS pricing per generated hour.

- 36 mo: Robotics OEMs shift 25 % of vision training data to synthetic video.

- 60 mo: Consumer “AI camcorder” apps replace phone video editing.

Tomorrow’s Spotlight: “Light-field Diffusion” from ETH Zürich, which could kill head-mounted displays entirely. Subscribe so you don’t miss it.

Voxel Digest decodes one breakthrough spatial-computing paper every weekday—for investors and founders, not academic journals. Have a paper tip?